Resolution of Human Eye: Unveiling the Secret

Just like cameras, our eyes capture everything around us, and - after a complicated process - we can see clear, beautiful images.

If our eyes share characteristics with cameras, you may wonder what the resolution of our eyes is. Or, put another way, how many pixels does the human eye have? Research on the question has reached a striking conclusion. Let’s find out together!

How Many Megapixels Does the Human Eye Have?

According to research by scientist and photographer Roger N. Clark, a screen would need a resolution of 576 megapixels to fill our entire field of view with all the detail we can resolve. Simply put, an image on a 576-megapixel screen would be the clearest one for us to interpret.

However, the “working process” of our eyes is not the same as a camera. Fundamentally, images are created by our eyes moving rapidly, getting a ton of information. The brain then translates this jigsaw puzzle into an image and then combines it with the information coming in from your other eye to increase the resolution. The truth is, 576 megapixels will likely be too detailed for humans, as your eyes don’t capture all visual information equally.

To estimate the resolution people actually see at any one moment, one part of the eye matters most: the fovea. The fovea is a small but critical part of the human eye that plays an essential role in our ability to see fine details and perceive color. We only take in fine detail through the fovea, and the image within its range amounts to about 7 megapixels. It's been roughly estimated that the rest of our field of view would only need an additional 1 megapixel’s worth of information to render an image.

How to Calculate Resolution of the Human Eye

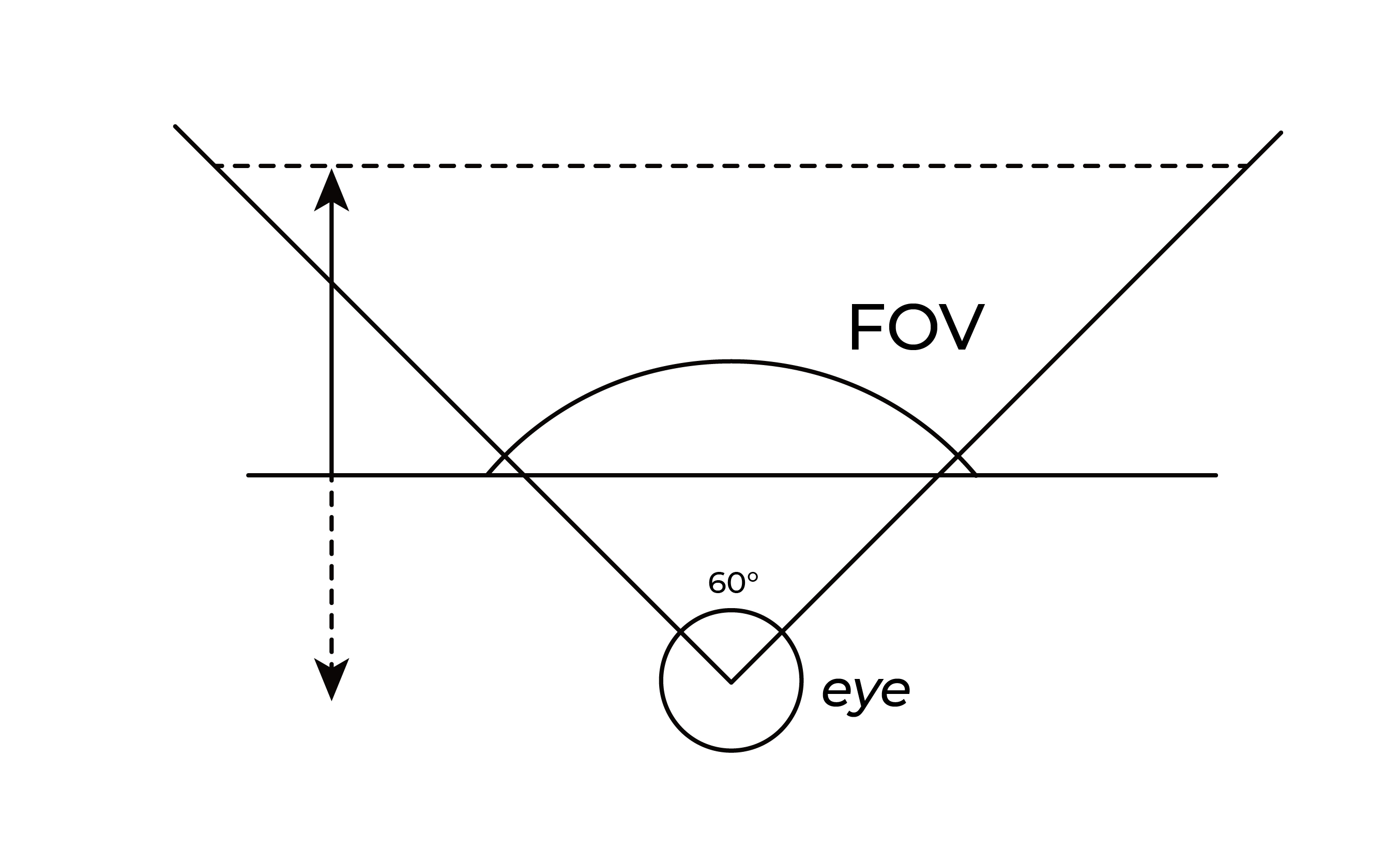

Pictures come in different sizes, and their resolution is found by multiplying the number of pixels along the horizontal side by the number along the vertical side. The human field of view, however, is measured in degrees and spans up to about 180 degrees. So, here’s how to calculate the highest resolution that human eyes can see.

Suppose that the view in front of human eyes is 90 degrees by 90 degrees, like looking through a window. The number of megapixels would be:

90 degrees * 60 arc-minutes/degree * 1/0.3 * 90 * 60 * 1/0.3 = 324,000,000 pixels (324 megapixels)

Arc-minute: Also known as a minute of arc, this is a unit of angular measurement equal to 1/60 of 1 degree.

Your eyes definitely can’t capture that many megapixels in a single moment. To see more detail, they move around. Human eyes have a large field of view, up to 180 degrees. To keep the estimate conservative, scientists take the average field of view of a human eye to be 120 degrees, so the formula is as follows:

120 * 120 * 60 * 60 / (0.3 * 0.3) = 576 megapixels

0.3: The calculation uses 0.3 arc-minutes as the angular size of a single pixel, that is, the finest detail the eye is assumed to resolve.
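The two calculations above can be reproduced with a short script. This is only a sketch of the arithmetic: the function name `eye_megapixels` and its parameters are made up for illustration, and it assumes a square grid of pixels that are each 0.3 arc-minutes wide.

```python
def eye_megapixels(fov_h_deg, fov_v_deg, arcmin_per_pixel=0.3):
    """Megapixels needed to tile a field of view at a given angular pixel size.

    fov_h_deg, fov_v_deg: field of view in degrees (horizontal, vertical).
    arcmin_per_pixel: assumed angular width of one pixel, in arc-minutes.
    """
    # 1 degree = 60 arc-minutes, so degrees * 60 / pixel size = pixels per side
    pixels_h = fov_h_deg * 60 / arcmin_per_pixel
    pixels_v = fov_v_deg * 60 / arcmin_per_pixel
    return pixels_h * pixels_v / 1_000_000


print(round(eye_megapixels(90, 90)))    # the 90-by-90 degree "window"
print(round(eye_megapixels(120, 120)))  # the 120-by-120 degree estimate
```

Changing `arcmin_per_pixel` shows how sensitive the result is to that one assumption: doubling the pixel size cuts the megapixel count to a quarter.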

News & Latest Research

The research field behind understanding how the human eye works is constantly evolving. From using AI to spot epileptic signs to discovering that the makeup of our eyes isn’t actually unique to humans, to recreating the exact way our eyes see and interpret images, it’s an exciting time to be in this research field.

Here are some of the latest findings or news.

● InceptiveMind May 26, 2023. Penn State has developed a new device that creates images by mimicking the human eye’s red, green, and blue cone cells and neural networks.

● Fstoppers Mar. 31, 2023. Experts in the film industry have found that the human eye doesn’t really have a frame rate, but for practical intents and purposes, it is about 10 fps.

● ASU News Mar. 13, 2023. One in six chimpanzees in a study was found to have the same characteristics that are present in the whites of a human eye.

● New Atlas Feb. 17, 2023. Researchers have started using an AI technology called MoSeq (or Motion Sequencing) to analyze the behavior of epileptic mice. These behavioral "fingerprints" can go unnoticed by the human eye, so AI outperforms the human eye in spotting epileptic behavior in mice.

The Process of Human Vision

To see the world clearly, our brain processes the visual information captured by both our eyes. But how do our eyes gather all the visual information? In this section, we’ll focus on the whole process of human vision.

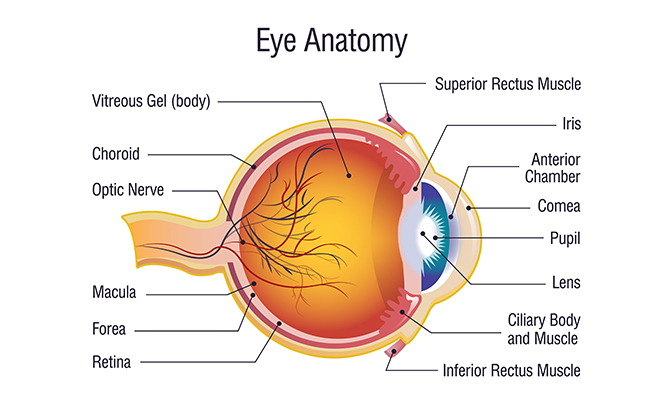

There are a ton of complicated processes that go into creating a visual image. Simultaneously, your eyes and brain have to be talking to each other through a network of neurons, receptors, and other specialized cells. The human eye contains a number of optical parts, including the cornea, iris, pupil, variable-focus lens, and retina. These parts work together to create the images in a person's field of view.

When we look at something, it’s first focused through the cornea and lens onto the retina, a multilayered membrane containing millions of light-sensitive cells that detect the image and convert it into a sequence of electrical signals.

The retina's image-capturing receptors are broken down into rods and cones. Linked to the fibers of the optic nerve bundle via a series of specialized cells, the retina coordinates the transmission of electrical signals to the brain.

Finally, in the brain, the optic nerves from both eyes meet at the optic chiasm, where information from both retinas is combined. The visual information is then processed in a series of steps before reaching the visual cortex. After all that, we can see and interpret what’s right in front of us!

Factors that Affect Human Vision

The process of human vision is kind of complicated and after years of investigation, humans only really understand the basics. Scientists have found some factors that may affect human vision, but there are still plenty more to be discovered.

Focal length

The focal length of an optical system measures how strongly the system converges or diverges light.

The human eye has a focal length of approximately 22mm, but unlike the focal length of a camera lens which measures the distance from the lens to its sensor, the back of the human eye is curved. This means that the periphery of a human's visual field gets progressively less detailed than the center. Add in the fact that the perceived image is the combined result of both eyes, and we end up with an average focal length that’s between 17mm and 22mm.

Angle of view

The angle of view defines the sizes of items or scenes in front of the eyes. Each eye has a 120-200° angle of view, depending on how strictly one defines objects as being "seen." Also, the overlapping area between the two eyes is around 130° wide, nearly as wide as a fisheye lens. Our perception is largely influenced by our central angle of vision, which ranges between 40 and 60 degrees.

The wider our angle of view, the more resolution can be captured by our eyes. However, if the angle is too broad, the sizes of items become exaggerated. On the flip side, too narrow an angle of view distorts the sizes of objects and eliminates the depth of what we can see. To make us see the perfect scene, our eyes will move around to capture what we want most.

Asymmetry

To evaluate whether a picture is good or not, experts use the term "symmetry" to describe the balance between each side of an image.

Cameras record images in an almost perfectly symmetrical way. However, each eye is more capable of perceiving detail below our line of sight than above it, and our peripheral vision is also much more sensitive in directions away from the nose than toward it. So what our two eyes see is not identical, and human vision can’t be described as symmetrical.

Subtle Gradations

Slowly, light becomes dark, big becomes small, and colors blend together. These are all examples of subtle gradation. Any visual element (whether it be size, shape, direction, edges, value, hue, intensity, temperature, or texture) can be graded just as it can be contrasted.

Enlarged detail might actually become less visible to our eyes when viewing from a distance. Cameras have the zoom functionality to focus on a distant item but human eyes don’t! The capability of subtle gradation of human eyes is weaker than cameras, which means the distance that human vision can reach is relatively limited.

Dynamic range

When we’re talking about cameras, the dynamic range describes the ratio between the brightest and darkest parts of an image. To our eyes, dynamic range shows how much contrast we can see. The human eye's dynamic range is much wider than a camera's.

Our dynamic range adjusts constantly to the lighting conditions. Rather than being "exposed" for only the bright or only the dark areas of a scene, our eyes move rapidly and adapt to each area in turn. Because they are always moving, we can gauge the light in every part of the scene.
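Since dynamic range is described above as a ratio between the brightest and darkest parts of an image, it is often convenient to express it on a doubling scale. The sketch below converts a ratio into "stops," a photographic term that this article does not use and that is introduced here as an assumption: one stop is one doubling of brightness. The function name and the sample ratio are illustrative only, not measurements of any eye or camera.

```python
import math


def dynamic_range_stops(brightest, darkest):
    """Express a brightest-to-darkest ratio as a number of doublings (stops)."""
    if brightest <= 0 or darkest <= 0:
        raise ValueError("luminance values must be positive")
    return math.log2(brightest / darkest)


# Illustrative ratio only: a scene whose brightest part is 1024 times
# brighter than its darkest part spans 10 doublings.
print(dynamic_range_stops(1024, 1))
```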

Sensitivity

Sensitivity describes the ability of an optical device to resolve very faint or fast-moving subjects. Sensitivity levels are measured on the ISO scale, with a small number meaning low sensitivity and a large number meaning high sensitivity. The most common ISO values are 100, 200, 400, 800, 1600, and 3200. Typically, the lowest ISO setting is 100.
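On the standard ISO scale each full step doubles the previous value, which is why the sequence runs from 100 up to 3200. A minimal sketch of that doubling, with a made-up helper name `iso_series`:

```python
def iso_series(base=100, steps=6):
    """Full-stop ISO values: each step doubles the sensitivity of the last."""
    return [base * 2 ** n for n in range(steps)]


print(iso_series())
```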

During the day, the eye is much less sensitive, at about the ISO equivalent of 1, which means that in the daytime human eyes aren't very sensitive to fast-moving objects. At low light levels, human eyes become more sensitive, but it takes longer for us to piece together moving objects at night.

Human Eye vs Camera: Can They Be Compared?

Human eyes and cameras can be compared due to their common ability to capture visual information. Let’s dive into their similarities and differences.

Similarities between Human Eyes and Cameras

Both the eye and the camera have lenses and light-sensitive surfaces. The iris regulates the amount of light that enters your eye. Your lens aids in focusing the light. The retina is a light-sensitive surface located in the rear of the eye which then sends impulses to your brain via the optic nerve. Finally, your brain interprets what you observe.

This is similar to what happens when a camera takes a photograph. The light first strikes the surface of the camera's lens. Then the aperture regulates the amount of light that enters the camera. The light then travels to a light-sensitive surface, which is either the film or, in a digital camera, an imaging sensor chip.

Another thing that retinas, film, and imaging sensor chips have in common is that they all receive an image that has been flipped upside down. Both the lens of the eye and a camera lens are convex, or curved outwards, and light refracts as it passes through a convex lens. This causes the image to be inverted.

When you look at images or watch movies, you don’t register that the image on your retina is upside down, because your brain steps in to assist your eyes. It understands that the world is supposed to be right side up, so the image is flipped again. Digital cameras are programmed to make this correction automatically. In non-digital cameras, a prism or mirror flips the image so that it looks right side up.

Differences between Human Eyes and Cameras

The three main differences between human eyes and cameras lie in how they focus on objects, how they process different colors, and what they can see in a scene.

Human eyes stay focused on moving objects by changing the shape of the lens inside. The thickness of the lens changes to accommodate what is being seen. The lens can do this because it’s attached to muscles in the eye that contract and relax.

A camera lens doesn’t have this ability, so photographers have to change lenses according to the distance between themselves and the object. Mechanical parts in the camera lens also need to be adjusted to stay focused on a moving object.

The process of seeing different colors depends on the cones located in the center of your eye’s retina. Cones let you see in color and there are three types of cones. Each type responds to different wavelengths of light. Red cones respond to long wavelengths. Blue cones respond to short wavelengths. Green cones respond to medium wavelengths. The way cameras respond to red, blue, and green light is by using filters placed on top of their photoreceptors.

Finally, what cameras and human eyes capture can be different. Cameras have photoreceptors across their entire sensor, so they can usually capture a full picture. Human eyes, however, have a blind spot where the optic nerve connects to the retina. People usually don’t notice the blind spot because the brain fills it in using what the other eye has captured of the same scene. What’s more, our eyes can move around fast and freely, while fixed cameras can’t.

Recommended High Megapixel Security Camera - Reolink Duo 3 PoE

Reolink Duo 3 PoE is an advanced security camera with a groundbreaking 16MP Ultra High Definition resolution, ensuring crystal-clear images. Its 180° panoramic view minimizes blind spots, providing comprehensive coverage. Equipped with motion tracking technology, it intelligently follows moving objects for enhanced surveillance. Plus, its color night vision captures vivid images in low-light conditions, ensuring round-the-clock security monitoring.


FAQs

How many pixels is the human eye?

According to research by scientist and photographer Roger N. Clark, a screen would need a resolution of 576 megapixels to encompass our entire field of view. Simply put, an image on a 576-megapixel screen would be the clearest one for us to interpret.

Can a human eye see 4K or 8K?

Yes, human eyes can fully enjoy the clarity of 4K and 8K resolution. However, it’s hard for most people to tell the two apart. Your eyes can see the difference between 8K and 4K only if you have high visual acuity or if you're extremely close to the screen.
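Whether a screen's pixels are fine enough to matter depends on how large each pixel appears from where you sit. The sketch below estimates that angular size and compares it with the 0.3 arc-minute figure used in the 576-megapixel calculation. The screen width (1.43 m, roughly a 65-inch 16:9 panel) and the viewing distance (2.5 m) are assumptions chosen for illustration, and the function name is hypothetical.

```python
import math


def arcmin_per_pixel(screen_width_m, horizontal_pixels, distance_m):
    """Angular width of one screen pixel, in arc-minutes, seen from distance_m."""
    pixel_pitch_m = screen_width_m / horizontal_pixels
    angle_rad = 2 * math.atan(pixel_pitch_m / (2 * distance_m))
    return math.degrees(angle_rad) * 60


EYE_LIMIT_ARCMIN = 0.3  # pixel size used in the article's resolution formula

# Assumed setup: 1.43 m wide screen viewed from 2.5 m
for name, pixels in [("4K", 3840), ("8K", 7680)]:
    size = arcmin_per_pixel(1.43, pixels, 2.5)
    verdict = "finer than" if size < EYE_LIMIT_ARCMIN else "coarser than"
    print(f"{name}: {size:.2f} arc-minutes per pixel, {verdict} the 0.3 limit")
```

Under these assumed numbers a 4K pixel spans roughly 0.5 arc-minutes and an 8K pixel roughly 0.26. Moving farther from the screen shrinks both values, which is why the difference disappears at typical viewing distances for most viewers.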

What is the highest eyesight recorded?

The highest eyesight recorded for humans is 20/5 vision, while the average person has a visual acuity of 20/20. A score of 20/5 means you can see things at 20 feet that most people can't see until they are standing 5 feet away.
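A score such as 20/5 can be turned into a single number by dividing the first figure by the second. The sketch below does that and also reports the smallest detail the eye can resolve, using the standard convention that 20/20 vision corresponds to resolving detail 1 arc-minute across. The function names are made up for illustration.

```python
def decimal_acuity(test_distance, reference_distance):
    """Convert a score like 20/5 into decimal acuity (20/20 gives 1.0)."""
    return test_distance / reference_distance


def smallest_detail_arcmin(test_distance, reference_distance):
    """Smallest resolvable detail in arc-minutes, taking 20/20 as 1 arc-minute."""
    return reference_distance / test_distance


for score in [(20, 20), (20, 5)]:
    print(score, decimal_acuity(*score), smallest_detail_arcmin(*score))
```

By this measure, 20/5 vision resolves details four times finer than 20/20 vision.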

Conclusion

The process of human vision is complicated and it’s a team effort between our eyes and brain. There are several factors that affect human vision, like focal length, dynamic range, sensitivity, etc.

Many people compare human eyes to cameras because both capture images under different lighting conditions, and the comparison is a logical one even though there are some major differences. So, were you ever curious about the highest resolution of human eyes? Do you think that our eyes work like a camera? Share this article with your friends and leave a comment below. Let’s discuss it together!
